Fascinating Leap Towards Neuromorphic Computing: A New Era in Tech

As the technology landscape keeps evolving, neuromorphic computing stands out as a fascinating leap. This approach to computer architecture takes its design cues from the human brain and could change the way computers process information. Curious to know more about this cutting-edge technology? Read on.

A Glimpse into Neuromorphic Computing

Neuromorphic computing, a fusion of neuroscience and computer science, is designed to emulate the human brain’s neural system. Where conventional processors largely step through streams of instructions and move data back and forth between separate memory and compute units, neuromorphic systems spread the work across many simple, neuron-like units that operate concurrently, much as the brain handles many signals at once. For workloads that suit this massively parallel design, neuromorphic computers can be faster and more energy-efficient than their traditional counterparts.
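To make the idea of a neuron-like unit more concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a textbook model often used to describe brain-inspired hardware. The article does not name a specific neuron model, so the choice of model, the function names `simulate_lif` and `simulate_layer`, and the parameter values are all illustrative assumptions.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.5, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns the list of time steps at which the neuron spiked.
    All parameter values are illustrative, not taken from any real chip.
    """
    potential = reset
    spike_times = []
    for step, current in enumerate(input_current):
        # Keep only a fraction of the stored potential (the "leak"),
        # then add the input arriving at this step.
        potential = leak * potential + current
        if potential >= threshold:
            spike_times.append(step)
            potential = reset  # fire, then start over
    return spike_times


def simulate_layer(inputs_per_neuron, **params):
    """Run several independent neurons, one input sequence per neuron.

    This Python loop visits the neurons one after another; neuromorphic
    hardware would update such units concurrently.
    """
    return [simulate_lif(inputs, **params) for inputs in inputs_per_neuron]


if __name__ == "__main__":
    steady = [0.6] * 6   # constant drive
    silent = [0.0] * 6   # no input, so no spikes
    print(simulate_layer([steady, silent]))
```

Each neuron only produces output when its accumulated input crosses the threshold, which is one reason this style of computation can be frugal with energy when inputs are sparse.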

The Potential of Neuromorphic Computing

The rise of neuromorphic computing opens up exciting possibilities in several fields. In robotics, neuromorphic systems could provide a foundation for robots with greater autonomy and better decision-making abilities. In machine learning and artificial intelligence (AI), neuromorphic computing could improve the efficiency and speed of deep learning algorithms. Furthermore, its power efficiency could significantly reduce the energy consumption of data centers, contributing to greener computing practices.

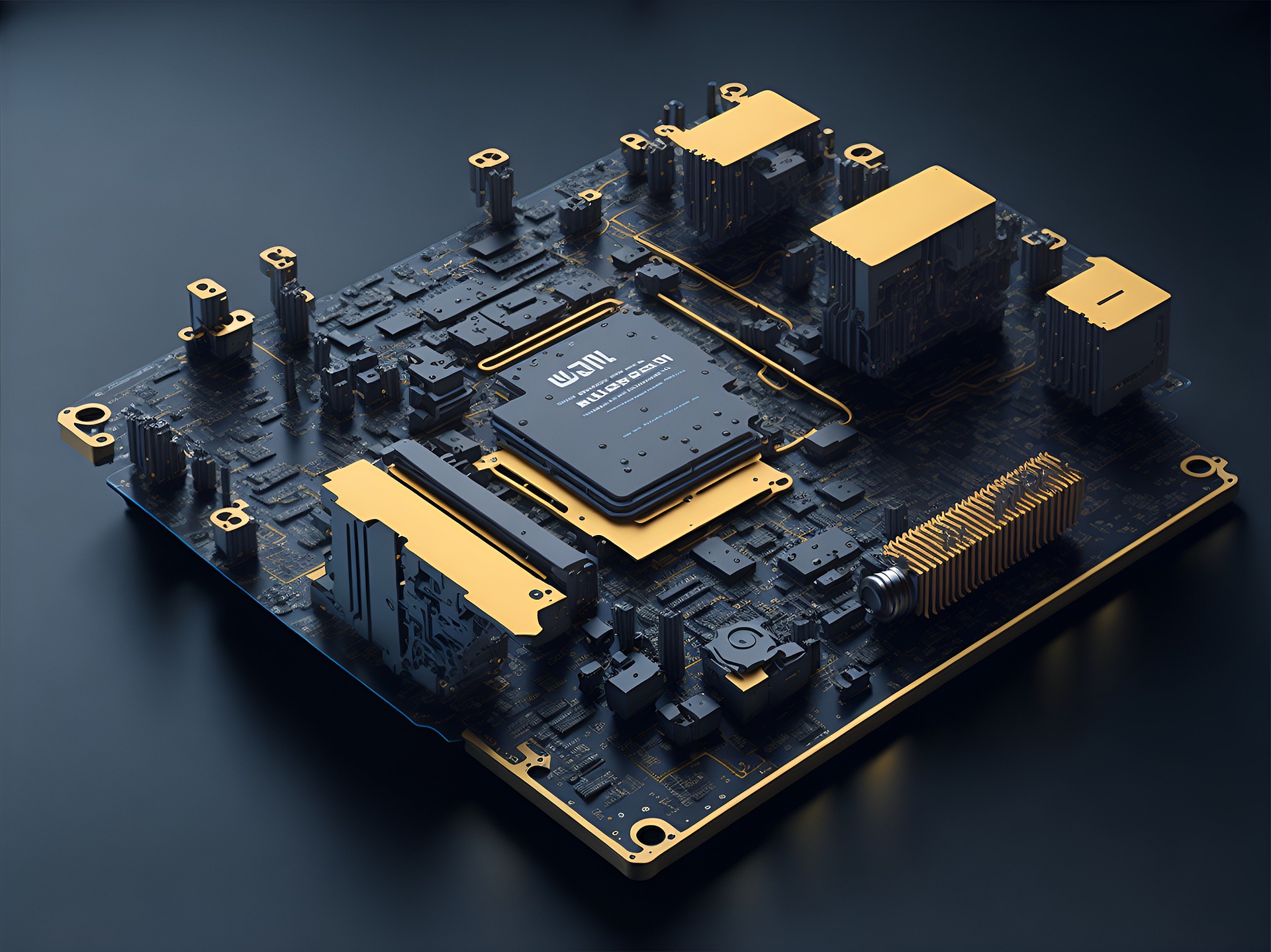

The Role of Neuromorphic Chips

Neuromorphic chips are the heart of neuromorphic computing systems. They are designed to mimic the organization and function of the human brain. Unlike conventional microprocessors, neuromorphic chips build features such as adaptive learning and memory elements directly into their architecture. Intel’s Loihi and IBM’s TrueNorth are among the best-known neuromorphic chips to date.
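As a rough illustration of what adaptive learning combined with local memory can look like, the sketch below applies a simple Hebbian-style rule: a connection is strengthened when its input and the output neuron are active at the same time. This is a generic textbook rule chosen for illustration, not the learning mechanism of Loihi or TrueNorth, and the name `hebbian_update` and its parameters are hypothetical.

```python
def hebbian_update(weights, pre_spikes, post_spike, learning_rate=0.1, w_max=1.0):
    """Return new connection weights after one Hebbian-style learning step.

    weights     -- current strength of each incoming connection
    pre_spikes  -- 1 if the corresponding input fired this step, else 0
    post_spike  -- 1 if the output neuron fired this step, else 0
    The rule and its constants are illustrative assumptions.
    """
    updated = []
    for weight, pre in zip(weights, pre_spikes):
        if pre and post_spike:
            # Input and output fired together: strengthen the connection,
            # but never beyond the maximum weight.
            weight = min(w_max, weight + learning_rate)
        updated.append(weight)
    return updated


if __name__ == "__main__":
    weights = [0.2, 0.5, 0.95]
    # Inputs 0 and 2 fired together with the output neuron.
    print(hebbian_update(weights, pre_spikes=[1, 0, 1], post_spike=1))
```

The point of the sketch is that each weight is updated using only information available at that connection, which is what lets learning and memory sit side by side in the hardware.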

Challenges in Neuromorphic Computing

Despite its potential, neuromorphic computing is not without challenges. One of the main hurdles is the complexity of the human brain itself: emulating its intricate structure and functions in silicon is no small feat. Moreover, developing and programming neuromorphic chips requires a blend of expertise in computer science, neuroscience, and chip design, a combination of skills that is not widely available in the tech industry.

The Future of Neuromorphic Computing

The future of neuromorphic computing holds immense promise. As research and development progress, we can expect to see more practical applications of this technology. Neuromorphic systems could play a crucial role in advancing AI, robotics, and other tech fields, paving the way for breakthroughs that could reshape the digital world as we know it.

Useful Tips and Facts:

- Neuromorphic computing is still in its early stages of development but holds enormous potential for revolutionizing various sectors.
- Neuromorphic chips like Intel’s Loihi and IBM’s TrueNorth are leading the way in this emerging field.
- The field requires a unique blend of expertise in computer science, neuroscience, and chip design.

In conclusion, neuromorphic computing is a fascinating leap towards a new era in technology. By mimicking the human brain’s functionality, it opens up exciting possibilities for AI, robotics, and more. As we continue to explore and develop this cutting-edge technology, we can look forward to a future where computers can process information more efficiently and intelligently than ever before.